DOI: 10.1109/ISCC.2010.5546636

Semantic Connections: Exploring and Manipulating Connections in Smart Spaces

Department of Industrial Design

Technische Universiteit Eindhoven

Eindhoven, Netherlands

{b.j.j.v.d.vlist, g.niezen, j.hu, l.m.g.feijs}@tue.nl

Abstract

In envisioned smart environments, enabled by ubiquitous computing technologies, electronic objects will be able to interconnect and interoperate. How will users of such smart environments make sense of the connections that are made and the information that is exchanged? This Internet of Things could have a life of its own, exchanging digital concepts and values between its members, having an understanding of each other and communicating in their own language. In this paper we report on an ongoing research project in the context of smart home environments. We discuss possibilities to represent this digital world in the physical reality we live in, by providing handles for control and clues that help users understand and build conceptual models of the connections that exist or can be made. This is achieved by making semantic abstractions of low-level events and presenting them to users at a higher level, in a simplified fashion. Furthermore, we used an ontology to describe the low-level events, and used reasoning to infer high-level meaningful information. Although we are in the preliminary stages of our research, we consider it worthwhile to share and illustrate our findings by presenting a demonstrator that implements our ideas in a home entertainment scenario.

I. Introduction

When computers and electronic products move to the background of an “Ambient Intelligent” environment as envisioned in [1], there is a risk of leaving its users clueless about what is going on. To tackle this risk, we need to look for novel interaction methods that can cope with the complexity of such hybrid environments, which merge the physical with the digital.

Over a decade of research has led to several interesting interaction paradigms such as Tangible Interaction (TI), augmented reality and mixed reality. As early as 1997, Ishii and Ullmer [2] introduced their vision of a new interaction paradigm for Ubiquitous Computing. By providing physical handles for digital information, users can use the senses and skills that people developed during millennia of interacting with physical objects [2].

Earlier work in the field shows solutions for simplifying configuration tasks of in-home networks by creating virtual “wires” between physical objects like memory cards [3] that can interconnect devices. Others propose to introduce tags, tokens and containers [4, 5] for tangible information exchange. Concepts like “pick-and-drop” [6] and “select-and-point” [7] are used to manage connections and data exchange between computers and networked devices.

The introduction of near field communication (NFC), using a near field channel like radio-frequency identification (RFID) or infrared communication, allows for direct manipulation of wireless network connections by means of proximal interactions [8]. This work, together with more recent work on Siftables [9], inspired us to develop a demonstrator to explore our “Semantic Connections” concept.

What sets our work apart from many of the earlier Tangible User Interface (TUI) concepts is our focus on connections. Instead of giving digital information physical containers/representations as done in many TUIs, we allow for exploration and manipulation of the connections “carrying” digital information (pipelines instead of buckets). We see these connections as both real “physical” connections (e.g. wired or wireless connections that exist in the real world) and “mental” conceptual connections that seem to be there from a user’s perspective. Their context (which things they connect) is pivotal for their meaning. We aim to enable users to explore and make configurations on a high semantic level without bothering them with low-level details. We believe this can be achieved by making use of Semantic Web technologies and ontologies in an interoperability platform as proposed by the SOFIA project. For more details on our work on this, please refer to another paper submitted to this workshop entitled “From Events to Goals: Supporting Semantic Interaction in Smart Environments” [10].

This paper presents the first insights, results and a proposal for further research as part of the ongoing research in the context of the SOFIA project. SOFIA (Smart Objects For Intelligent Applications) is a European research project addressing the challenge of Artemis sub-programme 3 on Smart Environments. The overall goal of this project is to connect the physical world with the information world, by enabling and maintaining interoperability between electronic systems and devices. We are involved in the project, contributing by developing smart applications for the smart home environment, and by developing novel ways of user interaction. For users to truly benefit from smart environments it is necessary that users are able to make sense of such an environment. One way of facilitating this “sense making” is through design. Our contribution to the SOFIA project aims at developing theories and demonstrators, and investigating novel ways of user-interaction with the smart environment through interaction with smart objects in physical space. Design theory like product semantics will be utilized to find handles for these new interactions [11, 12].

II. Design Case

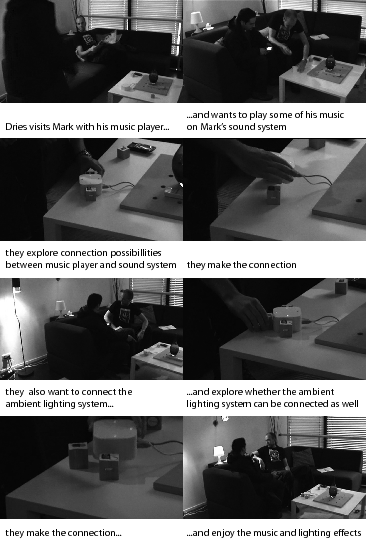

To illustrate the above-mentioned concepts and ideas, a demonstrator was developed. The demonstrator is a tile-like interactive object that allows for both exploration of a smart space in terms of connections, and manipulation of these connections and information/data streams through direct manipulation. This is done by making simple spatial arrangements. The interaction tile visualises the various connections by enabling users to explore which objects are connected to one another and what can be connected to what. Coloured LED lighting and light dynamics visualise the connections and connection possibilities between the devices. By putting devices close to one of the four sides of the tile, a user can check whether there is a connection and, if not, whether a connection is possible. By simply picking up the tile and shaking it, a user can make or break the connection between the devices present at the interaction tile. A video of the demonstrator is available. Figure 1 shows a use case example of how the interaction tile can be used. The interaction tile supports the following interactions:

- viewing/exploring existing connections;

- viewing/exploring connection possibilities;

- making connections;

- breaking connections.

A. Design of the interaction tile

The design of the demonstrator is simple and straightforward. The tile shape gives clear clues about orientation, e.g. which side should be placed up. The four sides clearly show four possibilities for placing objects near the tile; the size of each side restricts the number of objects one can place close to the tile. When an object is placed next to the tile, the LEDs give immediate feedback that the object is recognized. When multiple objects are placed near the interaction tile, it immediately shows the connection possibilities (feed forward) through lighting colour and dynamics. Red means no connection and no connection possibility; green means there is an existing connection between the devices present; and pulsing green means that a connection is possible. To indicate that the interaction tile did sense the first object a user places near it, it shows red at the side where the object was detected. When a second, third and fourth object are placed, the interaction tile shows the lighting effect corresponding to their connection capabilities. Picking up the tile and shaking it will make or break the connection between the devices present at the interaction tile. The result of this action depends on the connection’s current state and the devices present: if the tile shows a connection possibility, the action will result in a connection event. The same action performed when the tile shows an existing connection will break the connection.
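The feedback rules above can be read as a small state machine. The following is a minimal sketch of that reading in Python, not the demonstrator's actual firmware: the names `Feedback`, `feedback` and `shake` are hypothetical, the `OFF` state for an empty tile is an assumption, and so is the choice to show a broken connection as "possible" again after a shake.

```python
from enum import Enum


class Feedback(Enum):
    OFF = "off"                      # assumption: nothing near the tile
    RED = "red"                      # no connection and no connection possibility
    GREEN = "green"                  # existing connection between the devices
    GREEN_PULSING = "green pulsing"  # a connection is possible


def feedback(n_devices, connected, can_connect):
    """Return the lighting state for the devices currently at the tile."""
    if n_devices == 0:
        return Feedback.OFF
    if n_devices == 1:
        # The first detected object is acknowledged with red.
        return Feedback.RED
    if connected:
        return Feedback.GREEN
    if can_connect:
        return Feedback.GREEN_PULSING
    return Feedback.RED


def shake(state):
    """Return the lighting state after the user shakes the tile."""
    if state is Feedback.GREEN_PULSING:
        return Feedback.GREEN          # connection made
    if state is Feedback.GREEN:
        return Feedback.GREEN_PULSING  # connection broken (assumed still possible)
    return state                       # nothing to make or break
```

In this sketch the shake gesture is a toggle whose outcome depends only on the state currently shown, which matches the description that the same action either makes or breaks a connection.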

B. Implementation of the interaction tile

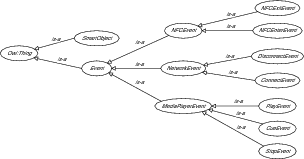

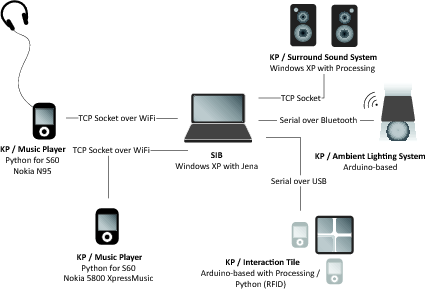

We want to enable users to explore and manipulate the connections within the smart space without having to bother with the lower-level complexity of the architecture. We envision this “user view” to be a simplified view (model) of the actual architecture of the smart space. Conceptually, the connections are carriers of information; in this case they carry music. Depending on the devices’ capabilities (e.g. audio/video input and/or output) and their compatibility (input to output, but not output to output), the interaction tile will show the connection possibilities. In our current demonstrator we do not distinguish between different types of data since we are only dealing with audio, but making this distinction will be inevitable in more complex scenarios. The interaction tile acts as an independent entity, inserting events and data into a triple store and querying the store when it needs information. The different types of events and the connections between smart objects and their related properties are described in an ontology. The ontology, with “is-a” relationships indicated, is shown in Figure 2. Figure 3 shows the architecture of the current setup.
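The compatibility rule (input to output, but not output to output) can be illustrated with a short Python sketch. It assumes audio only, as in the current demonstrator. The `Device` class, its field names and the example capabilities assigned to each device are hypothetical and chosen for illustration.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Device:
    name: str
    audio_out: bool = False  # device can act as an audio source
    audio_in: bool = False   # device can act as an audio sink


def can_connect(a, b):
    """A connection is possible only if one device's output meets the other's input."""
    return (a.audio_out and b.audio_in) or (b.audio_out and a.audio_in)


# Hypothetical capability assignments for devices named in the demonstrator.
player_a = Device("Nokia N95", audio_out=True)
player_b = Device("Nokia 5800 XpressMusic", audio_out=True)
sound_system = Device("sound system", audio_in=True)
```

With these assumed capabilities, `can_connect(player_a, sound_system)` holds, while `can_connect(player_a, player_b)` does not, because two outputs cannot be connected to each other.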

The interaction tile consists of the following components:

- Arduino board (Duemilanove);

- 13.56MHz RFID reader (ACS/MiFare);

- multi-colour LEDs;

- accelerometer;

- vibration motor;

- piezoelectric speaker;

- magnetic switches.

The demonstrator consists of the following devices:

- media players (Nokia N95 and 5800 XpressMusic);

- ambient lighting system (Arduino BT based homebrew lamp with RGB LEDs);

- sound system (speaker-set connected to notebook PC);

- notebook PC (acting as the Semantic Information Broker, SIB);

- interaction tile.

We implemented the demonstrator using the Jena Semantic Web framework, the Processing library for Java, and Python for S60. Every interaction with either the music-player smartphones or the interaction tile results in an interaction event. A reasoner (Pellet) is used to reason about these low-level events in order to infer higher-level results. When the user shakes the tile to establish a connection, two NFCEnterEvent events (generated by the RFID reader inside the interaction tile) from two different devices that are not currently connected result in a new connectedTo relationship between the two devices. Because connectedTo is a symmetric relationship, the reasoner will automatically infer that a connection from device A to device B means that device B is also connected to device A. Since connectedTo is also an irreflexive property, it is not possible for a device to be connected to itself. A generatedBy relationship is also created between the event and the smart device that generated it, along with a timestamp and other event metadata.
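To make the inference step concrete, the sketch below mimics it in plain Python over a set of (subject, predicate, object) triples. It is an illustration of the symmetric and irreflexive semantics of connectedTo, not the Jena/Pellet implementation used in the demonstrator. The helper names, the `type` predicate and the identifiers in the example store are hypothetical.

```python
CONNECTED_TO = "connectedTo"
GENERATED_BY = "generatedBy"
TYPE = "type"  # hypothetical predicate linking an event to its class


def symmetric_closure(triples, prop=CONNECTED_TO):
    """Add (o, prop, s) for every (s, prop, o), as a reasoner would infer."""
    return set(triples) | {(o, p, s) for (s, p, o) in triples if p == prop}


def is_consistent(triples, prop=CONNECTED_TO):
    """An irreflexive property may never relate a device to itself."""
    return not any(p == prop and s == o for (s, p, o) in triples)


def devices_at_tile(triples):
    """Devices that generated an NFCEnterEvent, in a stable order."""
    events = {s for (s, p, o) in triples if p == TYPE and o == "NFCEnterEvent"}
    return sorted({o for (s, p, o) in triples if p == GENERATED_BY and s in events})


def on_shake(triples):
    """Connect the two devices at the tile if they are not yet connected."""
    devices = devices_at_tile(triples)
    if len(devices) != 2:
        return set(triples)
    a, b = devices
    if (a, CONNECTED_TO, b) in symmetric_closure(triples):
        return set(triples)
    return set(triples) | {(a, CONNECTED_TO, b)}


# Hypothetical store after two devices were placed next to the tile.
store = {
    ("event1", TYPE, "NFCEnterEvent"), ("event1", GENERATED_BY, "phone"),
    ("event2", TYPE, "NFCEnterEvent"), ("event2", GENERATED_BY, "speakers"),
}
inferred = symmetric_closure(on_shake(store))
```

Here only one connectedTo triple is asserted; the reverse direction appears in `inferred` through the symmetric closure, and a self-connection would be flagged by `is_consistent`.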

III. Discussion

The current demonstrator helps us in defining more specific research questions and identifying key issues. Although simple, this demonstrator does show that making high-level semantic abstractions of low-level events has the potential to allow for semantic interaction in home-network configuration tasks.

Building this demonstrator also revealed many possibilities for improvements and extensions. As discussed before, it currently does not distinguish between different types of information exchanged, nor does it show directional properties of the connections. By replacing the single LEDs with LED arrays we could show the dynamics of information flow. Colour coding could show different types of connections (e.g. audio/video/text), or the tile could have separate modes of operation in which it only shows one type of connection at a time. Additionally, the cubes representing the mobile devices could easily be replaced by the real devices. When networking gets more complex and the connections among more than four devices need to be explored or manipulated, multiple interaction tiles can easily be combined.

Besides these observations, the demonstrator shows that even the slightest and simplest ways of giving feedback (LED colour, dynamics) can reveal meaningful information. To what extent users can extract meaningful information from the interactions with the smart space, and how they can use it to build a suitable mental model for understanding, has yet to be evaluated.

Recent work [9] shows the ongoing pursuit of making digital information and content physical, to allow for a natural way of accessing and controlling such data. Bridging the digital and physical has been a topic of research for over a decade. Although there is rich potential in tangible interaction concepts, shortcomings and tradeoffs are inevitable. One of the disadvantages of tangible computing is the introduction of (many) new physical objects into the environment. Leaving information in the digital world has advantages: we do not always want to have physical representations of all the information that we generate in the virtual world, which would mean overcrowding the physical space. A relatively unexplored approach is to use the existing physical (electronic) objects and devices in our interaction with the virtual world, going beyond using their (touch) screens and/or buttons to interact with the information world. We propose to use the physicality of the objects, e.g. their context, position and our usage of these objects, to generate new interaction concepts.

IV. Future work

This research should be considered work in progress. We will continue to develop research prototypes to investigate new interaction mechanisms. Furthermore, we will need to identify whether this way of interaction can be generalized and applied in different contexts in the home. Further research will attempt to answer questions like: How do we handle increased complexity? How do we reveal information about the information/content that is exchanged? How do we provide control over the content? How can the design of physical objects (appearance and behaviour) enhance the creation of suitable mental models in users?

Acknowledgment

SOFIA is funded by the European Artemis programme under the subprogramme SP3 Smart environments and scalable digital service.

References

[1] E. Aarts and S. Marzano, The New Everyday: Views on Ambient Intelligence. Rotterdam, The Netherlands: 010 Publishers, 2003.

[2] H. Ishii and B. Ullmer, “Tangible bits: towards seamless interfaces between people, bits and atoms,” in CHI ’97: Proceedings of the SIGCHI conference on Human factors in computing systems. Atlanta, Georgia, United States: ACM, 1997, pp. 234–241.

[3] Y. Ayatsuka and J. Rekimoto, “tranSticks: physically manipulatable virtual connections,” in CHI ’05: Proceedings of the SIGCHI conference on Human factors in computing systems. Portland, Oregon, USA: ACM, 2005, pp. 251–260.

[4] R. Want, K. P. Fishkin, A. Gujar, and B. L. Harrison, “Bridging physical and virtual worlds with electronic tags,” in CHI ’99: Proceedings of the SIGCHI conference on Human factors in computing systems. New York, NY, USA: ACM, 1999, pp. 370–377.

[5] L. E. Holmquist, J. Redström, and P. Ljungstrand, “Token-based access to digital information,” in HUC ’99: Proceedings of the 1st international symposium on Handheld and Ubiquitous Computing. London, UK: Springer-Verlag, 1999, pp. 234–245.

[6] J. Rekimoto, “Pick-and-drop: a direct manipulation technique for multiple computer environments,” in Proceedings of the 10th annual ACM symposium on User interface software and technology. Banff, Alberta, Canada: ACM, 1997, pp. 31–39.

[7] H. Lee, H. Jeong, J. Lee, K. Yeom, H. Shin, and J. Park, “Select-and-point: a novel interface for multi-device connection and control based on simple hand gestures,” in CHI ’08: extended abstracts on Human factors in computing systems. Florence, Italy: ACM, 2008, pp. 3357–3362.

[8] J. Rekimoto, Y. Ayatsuka, M. Kohno, and H. Oba, “Proximal interactions: A direct manipulation technique for wireless networking,” in Proceedings of Human-Computer Interaction; INTERACT’03. Amsterdam, the Netherlands: IOS Press, 2003, pp. 511–518.

[9] D. Merrill, J. Kalanithi, and P. Maes, “Siftables: towards sensor network user interfaces,” in Proceedings of the 1st international conference on Tangible and embedded interaction. Baton Rouge, Louisiana: ACM, 2007, pp. 75–78.

[10] G. Niezen, B. van der Vlist, J. Hu, and L. Feijs, “From events to goals: Supporting semantic interaction in smart environments,” in 1st Workshop on Semantic Interoperability for Smart Spaces (SISS2010), Riccione, Italy, Jun. 2010.

[11] K. Krippendorff, The Semantic Turn: A New Foundation for Design. Boca Raton: CRC Press, 2006.

[12] L. Feijs, “Commutative product semantics,” in Design and Semantics of Form and Movement (DeSForM 2009), 2009, pp. 12–19.