DOI: 10.1109/ICCSIT.2009.5234489

Software Architecture Support for Biofeedback Based In-flight Music Systems

Technische Universiteit Eindhoven

Eindhoven, Netherlands

{hao.liu, j.hu, g.w.m. rauterberg }@tue.nl

Abstract In this paper, we present a software architecture to support biofeedback based in-flight music systems that promote stress free air travel. Once the passenger is seated, his/her bio signals are acquired via non-intrusive sensors embedded in the seat and are then modeled into stress states. If the passenger is in a stress state, the system recommends a personalized stress reduction music playlist to transfer him/her from the current stress state to the target comfort state; if the passenger is not in a stress state, the system recommends a personalized non stress induction music playlist to keep him/her at the comfort state. If the passenger does not accept the recommendation, he/she can browse the in-flight music system and select preferred music himself/herself.

Keywords in-flight music; biofeedback; healthy air travels; stress reduction.

I. Introduction

An individual's enjoyment of travel depends upon a predisposition to cope well with a variety of stresses. One common method of reducing negative stress during air travel is to listen to stress reduction music available on the aircraft's in-flight entertainment system. In this paper, we present a software architecture to support biofeedback based in-flight music systems. Based on the passenger's current stress state and expert knowledge of the target comfort state, the system recommends a personalized music playlist to transfer the passenger to, or keep him/her at, the comfort state. If the passenger declines the recommendation, he/she can select his/her preferred music by browsing the in-flight music system.

The rest of this paper is organized as follows. Section II reviews related work, including current in-flight music systems, current music recommendation systems and current research on music stress reduction methods. Section III introduces a new biofeedback based music system for stress free air travel and presents the software architecture that supports it. Section IV describes the implementation of the biofeedback based in-flight music system. Conclusions and future work are presented in Section V.

II. Related work

In this section, we first investigate the state of the art of in-flight music systems and discuss its limitations. We then investigate current music recommendation systems. Finally, we review current research on music stress reduction methods.

A. Current in-flight music systems

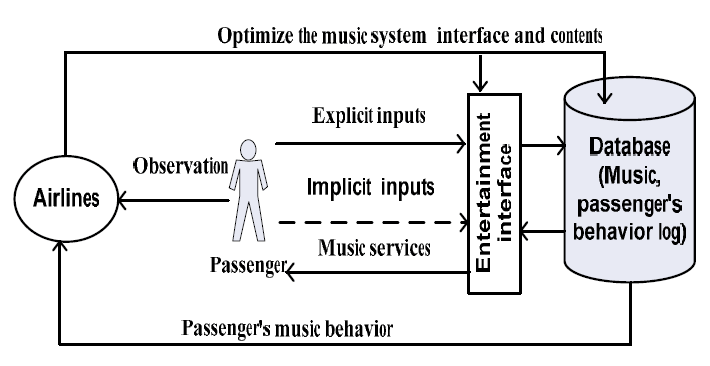

Liu investigated the state of the art of installed and commercially available in-flight entertainment systems [1]. Besides centralized music broadcasting, current in-flight entertainment systems also provide a music on demand service. Figure 1 shows the architecture of current on demand in-flight music systems. If the user wants to listen to music for recreation, he/she explicitly browses and selects the desired pieces of music step by step from the provided options via the interactive controller or touch screen. If the available choices are many and the interaction design is poor, the passenger tends to get disoriented and fails to find the most appealing music. What is more, stress inducing music that the passenger selects unconsciously may even increase rather than reduce his/her stress level. During this process, the airline may learn about the passenger's music consumption behavior via questionnaires or by observing his/her music usage, and can then optimize the music system interface and music contents to provide better music services. In this architecture, no implicit inputs from the passenger are used to facilitate personalized music browsing and selection.

Figure 1. Architecture of the current in-flight music systems

B. Current music recommendation systems

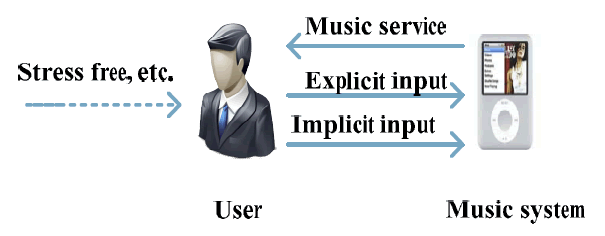

Currently, there are many research works on music recommendation in both academic and commercial fields [2] [3] [4] [5]. These systems succeed in recommending personalized music to users with few or no "unnecessary" explicit user inputs. Figure 2 shows the architecture of current music recommendation systems. If the user wants to listen to his/her favorite music for recreation, he/she can explicitly browse and select the desired pieces of music from the provided options. During this process, the music system may log the user's music selections for later music recommendation [2][3]. The latest developments also use the context of use as an implicit input from the user to facilitate context-aware or situation-aware music adaptation [4][5]. However, little attention has been paid to recommending music that helps the user achieve comfortable physical and psychological states.

Figure 2. Architecture of the current music recommendation systems

C. Current research on music stress reduction methods

There is a large body of literature on the use of music for reducing the user's stress. Steelman looked at a number of studies of music's effect on relaxation in which tempo was varied and concluded that tempos of 60 to 80 beats per minute reduce the stress response and induce relaxation, while tempos between 100 and 120 beats per minute stimulate the sympathetic nervous system [6]. Stratton and Zalanowski conducted experiments and found that preference, familiarity or past experiences with the music outweigh the type of music in their effect on positive behavior change [7]. Iwanaga found that people prefer music with a tempo ranging from 70 to 100 beats per minute, which is similar to that of adults' heart rate within

III. Software architecture support for biofeedback based in-flight music systems

In this section, we first introduce the objective of our in-flight music system. We then present the main components of the system and their functionalities. Finally, we introduce the software implementation architecture of the system.

A. Objective of the biofeedback based in-flight music system

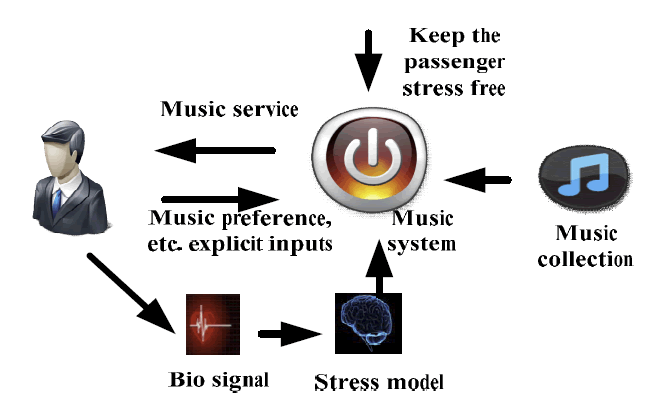

In Figure 3, the passenger's stress state is modeled from his/her bio signals, which are acquired by the non-intrusive bio sensors embedded in the flight seat. The music collection is imported and updated by the airline, and each piece of music is described by metadata. The passenger's music preference can be input explicitly by the user or learned implicitly by mining the user's interactions with the system. The objective of the biofeedback based in-flight music system is to mediate between the passenger's stress state, music preference and the available music in order to recommend a playlist of preferred music that transfers him/her from the current stress state to the target comfort state, or keeps him/her at the comfort state.

Figure 3. System objective: mediate between the user's stress state, music, etc. to enable stress free air travels
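
As an illustration of how bio signals might be modeled into stress states, the following minimal Java sketch classifies a passenger as stressed when the moving average of recent heart-rate samples exceeds a multiple of a resting baseline. The choice of heart rate as the bio signal, the class name `HeartRateStressModel`, the two-valued state and the threshold ratio are all assumptions made for this example; the paper does not prescribe a particular signal or model.

```java
import java.util.ArrayDeque;
import java.util.Deque;

/** Hypothetical stress model: moving average of heart rate versus a resting baseline. */
final class HeartRateStressModel {
    enum State { STRESSED, COMFORTABLE }

    private final double restingBpm;   // assumed per-passenger baseline
    private final double stressRatio;  // assumed threshold, e.g. 1.2 = 20% above baseline
    private final int windowSize;      // number of recent samples to average
    private final Deque<Double> window = new ArrayDeque<>();
    private double sum;

    HeartRateStressModel(double restingBpm, double stressRatio, int windowSize) {
        if (windowSize < 1) {
            throw new IllegalArgumentException("windowSize must be >= 1");
        }
        this.restingBpm = restingBpm;
        this.stressRatio = stressRatio;
        this.windowSize = windowSize;
    }

    /** Adds one heart-rate sample and returns the state estimated from the current window. */
    State update(double heartRateBpm) {
        window.addLast(heartRateBpm);
        sum += heartRateBpm;
        if (window.size() > windowSize) {
            sum -= window.removeFirst();  // drop the oldest sample
        }
        double mean = sum / window.size();
        return mean > restingBpm * stressRatio ? State.STRESSED : State.COMFORTABLE;
    }
}
```

Averaging over a window rather than reacting to single samples keeps one noisy reading from flipping the estimated state; the window length would need to be tuned against real sensor data.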

B. Main software components and their functionalities of the biofeedback based in-flight music system

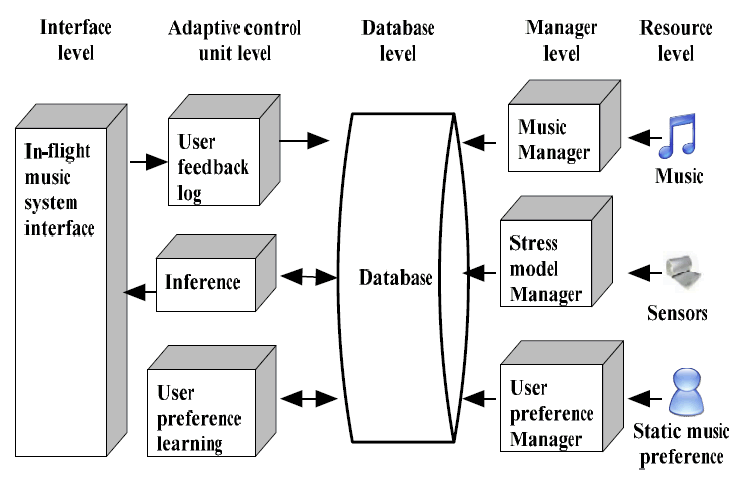

Figure 4 shows the main components that make up the biofeedback based in-flight music system. From the functionality point of view, the whole architecture is divided into five abstraction levels. The lowest level is the resource level, which contains music, bio sensors and the user's static music preference (the user's long-term commitment to certain genres of music).

The second layer is the resource manager layer, which includes the music manager, the stress model manager and the user preference manager. The music manager is responsible for music registration, un-registration and other management functions. The stress model manager first acquires bio signals from the sensors, then models the passenger's current stress state based on these signals and stores this information in the database. The user preference manager collects and updates the user's static music preference.

The third layer is the database layer, which consists of a database. It acts not only as a data repository, but also keeps the layers and the components in the layers loosely coupled. This increases the flexibility of the whole architecture. For example, replacing or updating components in the resource manager layer does not affect the rest of the architecture unless the data structures they store in the database change.

The fourth layer is the adaptive control unit layer, which includes the user feedback log, inference and user preference learning components. The user feedback log component is responsible for logging the user's feedback on the recommended music and the effects of the recommended music. The user preference learning component learns the user's preference from his/her past interactions with the recommended or self-selected music and forwards the learned results to the database for storage. The inference component is the core of the whole architecture. It mediates between the user's music preference, stress state and the available music to recommend preferred stress reduction music that transfers the passenger from the current stress state to the target comfort state, or keeps him/her at the comfort state with non stress induction music.

The fifth layer is the interface layer. The passenger interacts with the system interface to get music services.

Figure 4. Main components of the in-flight music system
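
The mediation performed by the inference component can be sketched as follows. This is a minimal illustration and not the inference method of the system, which the paper does not specify: it assumes that tempo is the only metadata used, borrows the tempo bands from the ranges cited from Steelman in Section II.C [6], and ranks preferred genres first. The names `TrackInfo`, `PassengerState` and `InferenceSketch` are hypothetical.

```java
import java.util.ArrayList;
import java.util.Comparator;
import java.util.List;
import java.util.Set;

/** Hypothetical two-valued stress state supplied by the stress model manager. */
enum PassengerState { STRESSED, COMFORTABLE }

/** Hypothetical track metadata: title, genre and tempo in beats per minute. */
record TrackInfo(String title, String genre, int tempoBpm) {}

final class InferenceSketch {
    // Assumed tempo bands, loosely based on the ranges cited from [6].
    static final int RELAX_MIN_BPM = 60;
    static final int RELAX_MAX_BPM = 80;
    static final int STIMULATING_MIN_BPM = 100;

    /** Returns at most maxTracks suitable tracks, preferred genres first, slower tempo first. */
    static List<TrackInfo> recommend(PassengerState state, Set<String> preferredGenres,
                                     List<TrackInfo> collection, int maxTracks) {
        List<TrackInfo> candidates = new ArrayList<>();
        for (TrackInfo t : collection) {
            boolean suitable = (state == PassengerState.STRESSED)
                    // stressed: only tracks in the assumed relaxing band
                    ? t.tempoBpm() >= RELAX_MIN_BPM && t.tempoBpm() <= RELAX_MAX_BPM
                    // comfortable: anything below the assumed stimulating band
                    : t.tempoBpm() < STIMULATING_MIN_BPM;
            if (suitable) {
                candidates.add(t);
            }
        }
        // false sorts before true, so tracks in a preferred genre come first.
        candidates.sort(Comparator
                .comparing((TrackInfo t) -> !preferredGenres.contains(t.genre()))
                .thenComparingInt(TrackInfo::tempoBpm));
        return List.copyOf(candidates.subList(0, Math.min(maxTracks, candidates.size())));
    }
}
```

In this sketch the stress state filters the collection and the preference only orders the remaining tracks, so a preferred but unsuitable track is never recommended; a real system might weigh the two differently.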

C. Software implementation architecture of the biofeedback based in-flight music system

The software implementation architecture considers not only the main components and their functionalities in Figure 4, but also the browser/server architecture on which the system is based. Here, the browser/server architecture means that the biofeedback based in-flight music system resides on a central server; the passenger can browse it via the intranet; and for each passenger, there is a personalized session between his/her in-seat computer and the central server. In the following paragraphs, we first introduce the sub packages of our source code, then the classes in each sub package, and finally the deployment diagram.
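
The idea of one personalized session per passenger can be illustrated with a small registry kept on the central server. The sketch below is only an assumption about how such bookkeeping could look; the class name `SeatSessionRegistry`, the use of the seat number as key and the random session identifier are hypothetical and not taken from the system's source code.

```java
import java.util.Map;
import java.util.UUID;
import java.util.concurrent.ConcurrentHashMap;

/** Hypothetical server-side registry: one session per in-seat computer. */
final class SeatSessionRegistry {
    /** Hypothetical session: an opaque identifier bound to one seat. */
    record SeatSession(String sessionId, String seatNumber) {}

    // Concurrent map because many in-seat computers may connect at the same time.
    private final Map<String, SeatSession> bySeat = new ConcurrentHashMap<>();

    /** Returns the existing session for the seat, or creates one on first contact. */
    SeatSession open(String seatNumber) {
        return bySeat.computeIfAbsent(seatNumber,
                seat -> new SeatSession(UUID.randomUUID().toString(), seat));
    }

    /** Ends the session for the seat; returns true if a session existed. */
    boolean close(String seatNumber) {
        return bySeat.remove(seatNumber) != null;
    }

    /** Number of seats that currently have an open session. */
    int activeSessions() {
        return bySeat.size();
    }
}
```

Keying the session by seat lets the server attach the stress state and the recommended playlist to the right passenger on every request from that seat.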

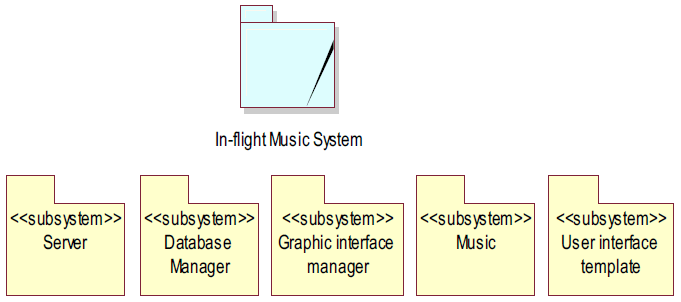

In Figure 5, the software package of the in-flight music system is composed of five sub packages. The server package includes the classes that function as a web server and a music streaming server. The classes in the database manager package are responsible for creating and updating the database and for storing and retrieving data. The graphic interface manager package includes the classes that are responsible for generating all the graphic interfaces on the server side. The music package defines the track, playlist, album, artist, etc. classes and the relations between them. These are the basic music items in the biofeedback based music system. The user interface template package includes all the user interface templates of the in-flight music system.

Figure 5. Package diagram of the biofeedback based in-flight music system

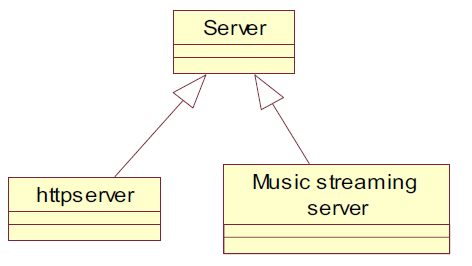

The main classes of the server package are an HTTP server and a music streaming server. The HTTP server class handles the requests and responses of the user's web visits. The music streaming server delivers a personalized audio stream to each on-board passenger.

Figure 6. Class diagram of the server package

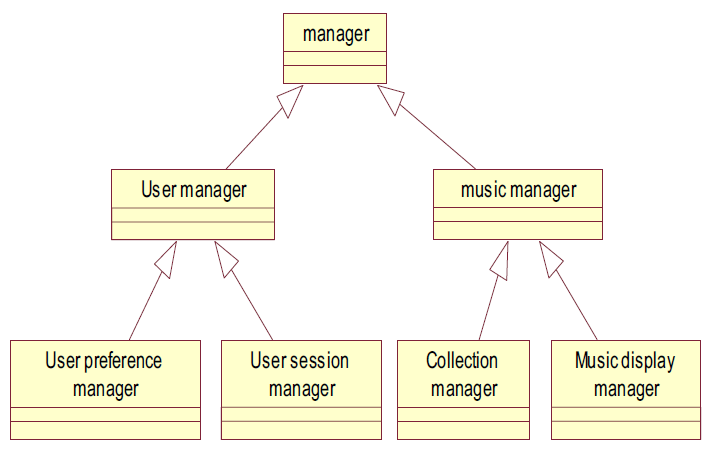

In the graphic interface manager package, the user preference manager class is responsible for acquiring and updating user preferences. The user session manager sets up a unique session between each in-seat music system interface and the server. The collection manager imports the metadata of a music collection into the database. It is also responsible for deleting a music collection from the database. The music display manager displays all the music in the database visually to help the administrator manage the music collection.

Figure 7. Class diagram of the graphic interface manager package
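
The import and delete responsibilities of the collection manager can be sketched as below. An in-memory map stands in for the database, and the names `TrackMetadata` and `CollectionManagerSketch`, as well as the choice of metadata fields, are assumptions made for illustration only.

```java
import java.util.LinkedHashMap;
import java.util.List;
import java.util.Map;

/** Hypothetical metadata record for one track; the field set is an assumption. */
record TrackMetadata(String id, String title, String artist, String album) {}

/** Hypothetical collection manager; a map stands in for the database tables. */
final class CollectionManagerSketch {
    // collection name -> (track id -> metadata)
    private final Map<String, Map<String, TrackMetadata>> store = new LinkedHashMap<>();

    /** Imports metadata into the named collection and returns how many new tracks were added. */
    int importCollection(String name, List<TrackMetadata> tracks) {
        Map<String, TrackMetadata> byId =
                store.computeIfAbsent(name, n -> new LinkedHashMap<>());
        int added = 0;
        for (TrackMetadata t : tracks) {
            // Tracks whose id is already present are skipped, not overwritten.
            if (byId.putIfAbsent(t.id(), t) == null) {
                added++;
            }
        }
        return added;
    }

    /** Deletes the whole collection; returns true if it existed. */
    boolean deleteCollection(String name) {
        return store.remove(name) != null;
    }

    /** Lists the tracks of a collection in import order, or an empty list if unknown. */
    List<TrackMetadata> list(String name) {
        Map<String, TrackMetadata> byId = store.get(name);
        return byId == null ? List.of() : List.copyOf(byId.values());
    }
}
```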

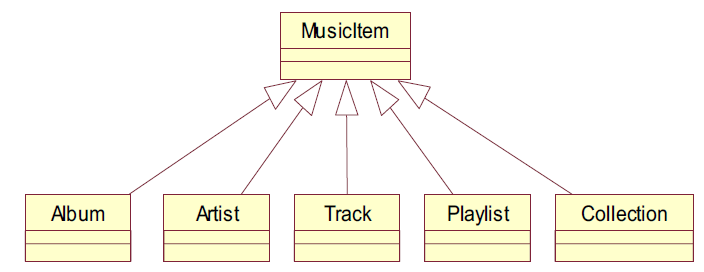

In the music package, the music item class holds the basic characteristics of a piece of music. The album, artist, track, playlist and collection classes inherit from the music item class so that the relations among them can be defined easily.

Figure 8. Class diagram of the music package
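
A minimal sketch of such an inheritance hierarchy is given below. Only the class roles (music item, artist, album, track, playlist) come from the description above; the attributes, constructors and method names are assumptions, and the collection class is omitted for brevity.

```java
import java.util.ArrayList;
import java.util.Collections;
import java.util.List;

/** Base class holding what all music items share; here only a name (assumed). */
abstract class MusicItem {
    private final String name;

    protected MusicItem(String name) {
        this.name = name;
    }

    String getName() {
        return name;
    }
}

final class Artist extends MusicItem {
    Artist(String name) {
        super(name);
    }
}

final class Album extends MusicItem {
    private final Artist artist;

    Album(String name, Artist artist) {
        super(name);
        this.artist = artist;
    }

    Artist getArtist() {
        return artist;
    }
}

final class Track extends MusicItem {
    private final Album album;
    private final int durationSeconds;  // hypothetical attribute

    Track(String name, Album album, int durationSeconds) {
        super(name);
        this.album = album;
        this.durationSeconds = durationSeconds;
    }

    Album getAlbum() {
        return album;
    }

    int getDurationSeconds() {
        return durationSeconds;
    }
}

final class Playlist extends MusicItem {
    private final List<Track> tracks = new ArrayList<>();

    Playlist(String name) {
        super(name);
    }

    void add(Track track) {
        tracks.add(track);
    }

    /** Read-only view so callers cannot reorder or remove tracks directly. */
    List<Track> getTracks() {
        return Collections.unmodifiableList(tracks);
    }

    int totalDurationSeconds() {
        int total = 0;
        for (Track t : tracks) {
            total += t.getDurationSeconds();
        }
        return total;
    }
}
```

Because every class extends `MusicItem`, code such as a display manager can list artists, albums, tracks and playlists through the shared `getName()` method without knowing the concrete type.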

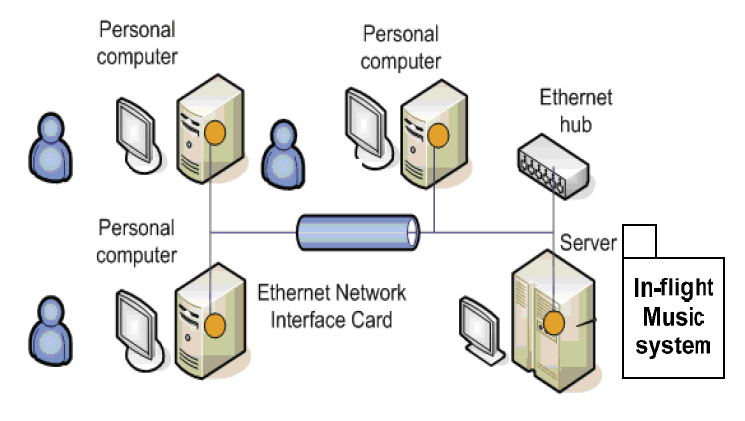

Figure 9 describes the deployment of the biofeedback based in-flight music system. After the classes discussed above are built and exported as executable software, the software can be deployed on the central server. The passenger can then access it via the intranet.

Figure 9. Deployment of the in-flight music system

IV. Implementation

The in-flight music system is implemented in Java and Jamon. Jamon is a text template engine for Java that is useful for generating dynamic HTML, XML, or any text-based content [9]. It is used here to implement the user interface templates.

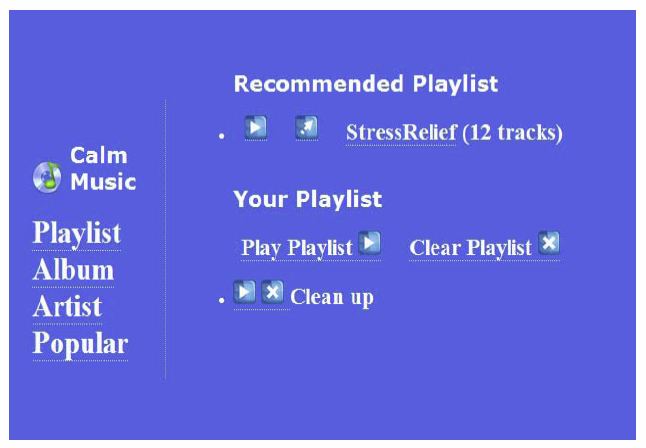

Figure 10 is a screenshot of our integrated HTTP server, music collection manager, user preference manager, music streaming server, music display manager, etc. Once the user runs the software and this interface pops up, the HTTP server is on. The user can switch between the different managers (music display manager, music collection manager and user preference manager) by clicking on the corresponding tabs. Figure 11 is a screenshot of the music system interface. In the figure, a stress relief music playlist is recommended to the passenger according to his/her current stress state, target comfort state, music preference, etc. If the passenger accepts the recommendation, he/she can press the play button to listen; if the passenger declines the recommendation, he/she can browse the system by album, etc. to select the music himself/herself.

Figure 10. Integrated http server, music display manager, etc.

Figure 11. Music system interface

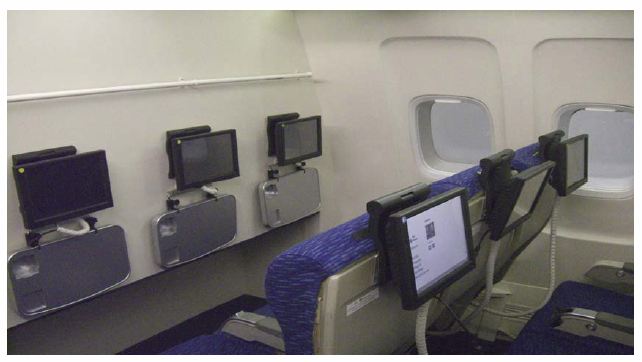

Figure 12 is a snapshot of the economy class section of our test bed. The test bed is built to simulate long haul flight situations. Each seat has an in-seat computer that supports a touch screen and a bio sensor embedded under the textile of the seat; each in-seat computer is connected to a central server via a switch. The passenger can browse the in-flight music system via the touch screen in front of him/her. Experiments to validate whether our biofeedback based in-flight music system can enable stress free air travel will be carried out in this test bed.

Figure 12. A snapshot of our test bed

V. Conclusions and future work

In-flight music systems play an important role in improving the passenger's comfort level. However, current in-flight music systems do not use the user's implicit inputs to help him/her find the desired music. At the same time, current music recommendation systems have made significant progress in recommending context-aware and personalized music from a large collection, but little attention has been paid to recommending music that helps the user achieve comfortable physical and psychological states. In this paper, we present a software architecture to support a biofeedback based music recommendation system. We start by introducing the objective of the biofeedback based in-flight music system. We then present the components and their functionalities. Finally, we introduce the software implementation architecture. We have already implemented our biofeedback based music system. Currently, we are preparing an experiment to validate whether our system can enable stress free air travel.

Acknowledgment

This project is sponsored by the European Commission DG H.3 Research, Aeronautics Unit under the 6th Framework Programme, under contract Number: AST5-CT-2006-030958.

References

[1] H. Liu, "State of Art of In-flight Entertainment Systems and Office Work Infrastructure", Deliverable 4.1 of European project Smart tEchnologies for stress free Air Travel, Technical university of Eindhoven, 2006.

[2] Pandora, "Personalized music service", retrieved March 1, 2009 from Pandora's Web site: http://www.pandora.com.

[3] NH. Liu, SW. Lai, CY. Chen and SJ. Hsieh, "Adaptive Music Recommendation Based on User Behavior in Time Slot", IJCSNS International Journal of Computer Science and Network Security, VOL9, No.2, pp 219-227, 2009.

[4] J. Seppänen and J. Huopaniemi, "Interactive and context-aware mobile music experiences", Proc. of the 11th Int. Conference on Digital Audio Effects (DAFx-08), Espoo, Finland, September 1-4, 2008.

[5] J. Wang, MJT Reinders, J. Pouwelse, RL. Lagendijk, "Wi-Fi walkman: a wireless handhold that shares and recommends music on peer-to-peer networks" IS&T/SPIE Symposium on Electronic Imaging 2005.

[6] VM. Steelman, "Relaxing to the beat: music therapy in perioperative nursing". Today's OR Nurse, Vol. 13, pp.18-22,1991.

[7] VN. Stratton and AH. Zalanowski, "The Relationship between Music, Degree of Liking, and Self-Reported Relaxation", Journal of Music Therapy, 21(4): 184-92, 1984.

[8] M. Iwanaga, "Relationship between heart rate and preference for tempo of music". Percept Mot Skills, Oct, 81(2):435-40, 1995.

[9] Jamon, "User's guide", Retrieved March 2nd, 2009 from Jamon's website: http://www.jamon.org/UserGuide.html.